This post follows on from configuring PKI Server. It explains how to configure the HTTPD server and how to use it, and gives some hints on debugging it when it goes wrong.

Having tried to get this working (and fixing the odd bug) I feel that this area is not as well designed as it could have been, and I could not get parts of it to work.

For example

- You cannot generate a browser-based certificate request because the <keygen> HTML tag was removed from browsers around 2017, and the web page fails. See here. You can use the 1-Year PKI Generated Key Certificate instead, so this is not a big problem now we know.

- The TLS cipher specs did not have the cipher specs I was using.

- I was expecting a simple URL like https://10.1.1.2/PKIServer/Admin. You have to use https://10.1.1.2/PKIServ/ssl-cgi-bin/camain.rexx, which exposes the structure of the files. You can go directly to the Admin URL using https://10.1.1.2/PKIServ/ssl-cgi-bin/auth/admmain.rexx, which is not very elegant.

- For an end user to request a certificate you have to use https://10.1.1.2/Customers/ssl-cgi-bin/camain.rexx.

- There seem to be few security checks.

- I managed to get into the administrative panels and display information using a certificate mapping to a z/OS userid, and with no authority!

- There are no authority checks for people requesting a certificate. This may not be an exposure as the person giving the OK should be checking the request.

- There were no security checks for administration functions. (It is easy to add them).

- You can configure HTTPD to use certificates for authentication and fall back to userid and password.

- There is no FallbackResource specified. This is a default page which is displayed if you get the URL wrong.

- The web pages are generated dynamically. These feel over engineered. There was a problem with one of the supplied pages, but after some time trying to resolve the problem, I gave up.

I’ll discuss how to use the web interface, then I’ll cover the improvements I made to make the HTTP configuration files meet my requirements, and give some guidance on debugging.

You may want to use an HTTPD server just for PKI Server. If you want to share a server, I suggest you allocate a TLS port just for PKI Server.

URL

The URL looks like

https://10.1.1.2:443/PKIServ/ssl-cgi-bin/camain.rexx

where (see Overview of port usage below for more explanation)

- 10.1.1.2 is the address of my server

- port 443 is for TLS with userid and password authentication

- PKIServ is part of the configuration. If you have multiple CAs this will be CA dependent.

- ssl-cgi-bin is the “directory” where …

- camain.rexx is the Rexx program that does the work.

The URL https://10.1.1.2:443/Customers/ssl-cgi-bin/camain.rexx uses the same camain.rexx as for PKIServ, but in the template for displaying data it uses the section with the same name (Customers) as the URL.
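As a rough illustration of how the pieces of the URL line up, the following Python sketch splits a PKI Server URL into the parts listed above. The function name split_pki_url and the dictionary keys are made up for this example; they are not part of PKI Server or HTTPD.

```python
from urllib.parse import urlsplit


def split_pki_url(url):
    """Split a PKI Server URL into the parts described above (illustrative only)."""
    parts = urlsplit(url)
    segments = [s for s in parts.path.split("/") if s]
    return {
        "host": parts.hostname,
        # Fall back to the scheme's default port when none is given.
        "port": parts.port or (443 if parts.scheme == "https" else 80),
        "ca_section": segments[0],               # PKIServ or Customers
        "directory": "/".join(segments[1:-1]),   # for example ssl-cgi-bin
        "program": segments[-1],                 # for example camain.rexx
    }


admin = split_pki_url("https://10.1.1.2:443/PKIServ/ssl-cgi-bin/camain.rexx")
customer = split_pki_url("https://10.1.1.2/Customers/ssl-cgi-bin/camain.rexx")
print(admin)
print(customer)
```

Both URLs end in the same program, camain.rexx; only the first path segment differs, and that is the value used to pick the section of the template.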

Overview of port usage

There are three default ports set up in the HTTPD server for PKI Server. I found the port set-up confusing, and not well documented. I’ve learned (by trial and error) that

- port 80 (the default for non-https requests) is for unauthenticated requests, with no TLS session protection. All data flows as clear text. You may not want to use port 80.

- port 443 (the default for https requests) is for authentication with userid and password, with TLS session protection.

- port 1443 is for certificate authentication, with TLS session protection. A request to https://10.1.1.2:443/PKIServ/clientauth-cgi/auth/admmain.rexx is mapped internally to https://10.1.1.2:1443/PKIServ/clientauth-cgi-bin/auth/admmain.rexx. I cannot see the need for this port and its configuration.

and for the default configuration

- port:/PKIServ/xxx is for administrators

- port:/Customers/xxx is for an end user.

and xxx is

- clientauth-cgi. This uses TLS for session encryption. Port 1443 runs with user SAFRunAs PKISERV. All updates are done using the PKISERVD userid, this means you do not need to set up the admin authority for each userid. There is no security checking enabled. I was able to process certificates from a userid with no authority!

- ssl-cgi-bin. This uses TLS and port 443. I had to change the file to be SAFRunAs %%CERTIF%% as $$CLIENT$$ is invalid. You have to give each administrator ID access to the appropriate security profiles.

- public-cgi. This is used by some insecure requests, such as print a certificate.

I think the only one you should use is ssl-cgi-bin.
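The mapping from port 443 to port 1443 described above can be pictured as a regular-expression rewrite of the request path. The Python below is only a sketch of that idea, not how HTTPD implements it. The function name clientauth_redirect is hypothetical, and the pattern is modelled on the clientauth-cgi rule in the configuration.

```python
import re

HOST = "10.1.1.2"  # the server address used throughout this post


def clientauth_redirect(path):
    """Return the port 1443 URL for a clientauth-cgi path, or None if the rule does not apply."""
    match = re.match(r"^/(PKIServ|Customers)/clientauth-cgi/(.*)", path)
    if match is None:
        return None
    section, rest = match.groups()
    return f"https://{HOST}:1443/{section}/clientauth-cgi-bin/{rest}"


print(clientauth_redirect("/PKIServ/clientauth-cgi/auth/admmain.rexx"))
```

A path that already contains clientauth-cgi-bin does not match the pattern, so the redirected request is not redirected a second time.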

Accessing the services

You can start using

These both give a page with

- Administration Page. This may prompt for your userid and password, and gives you a page

- Customer’s Home Page. This gives a page https://10.1.1.2/Customers/ssl-cgi-bin/camain.rexx? called PKI Services Certificate Generation Application. This has functions like

- Request a new certificate using a model

- Pickup a previously requested certificate

- Renew or revoke a previously issued browser certificate

Note: You cannot use https://10.1.1.2:1443/PKIServ/ssl-cgi-bin/camain.rexx, as 1443 is not configured for this. I could access the admin panel directly using https://10.1.1.2:1443/PKIServ/ssl-cgi-bin/auth/admmain.rexx

I changed the 443 definition to support both client certificate and password authentication by using

- SSLClientAuth Optional . This will cause the end user to use a certificate if one is available.

- SAFRunAs %%CERTIF%% . This says use the Certificate authentication when available, if not prompt for userid and password.

Certificate requests

I was able to use the admin interface and display all certificate requests.

Request a new certificate using a model.

I tried to use the model “1 Year PKI SSL Browser Certificate“. This asks the browser to generate a private/public key (rather than the PKIServer generating them). This had a few problems. Within the page is a <KEYGEN> tag which is not supported in most browsers. It gave me

- The field “Select a key size” does not have anything to select, or type.

- Clicking submit request gave me IKYI003I PKI Services CGI error in careq.rexx: PublicKey is a required field. Please use back button to try again or report the problem to admin person to

I was able to use a “1 Year PKI Generated Key Certificate“

The values PKIServ and Customers are hard-coded within some of the files.

If you want to use more than one CA, read z/OS PKI Services: Quick Set-up for Multiple CAs. Use this book if you want to change “PKIServ” and “Customers”.

Colin’s HTTPD configuration files.

Because I had problems with getting the supplied files to work, I found it easier to restructure, parameterise and extend the provided files.

I’ve put these files on GitHub.

Basic restructure

I restructured and parametrised the files. The new files are

- pki.conf. You edit this to define your variables.

- 80.conf contains the definitions for a general end user, not using TLS. So the session is not encrypted. Not recommended.

- 443.conf the definitions for the TLS port. You should not need to edit this while you are getting started. If you want to use multiple Certificate Authorities, then you need to duplicate some sections, and add definitions to the pki.conf file. See here.

- 1443.conf the definitions for the TLS port for the client-auth path. You should not need to edit this while you are getting started. If you want to use multiple Certificate Authorities, then you need to duplicate some sections, and add definitions to the pki.conf file. See here.

- pkisetenv.conf. This is pulled in with Include conf/pkisetenv.conf and sets some environment variables.

- pkissl.conf. The SSL definitions have been moved to this file, and it has an updated list of cipher specs.

The top level configuration file pki.conf

The top level file is pki.conf. It has several sections

system wide

# define system wide stuff

# define my host name

Define sdn 10.1.1.2

Define PKIAppRoot /usr/lpp/pkiserv

Define PKIKeyRing START1/MQRING

Define PKILOG "/u/mqweb3/conf"

# The following is the default

#Define PKISAFAPPL "OMVSAPPL"

Define PKISAFAPPL "ZZZ"

Define serverCert "SERVEREC"

Define pkidir "/usr/lpp/pkiserv"

#the format of the trace entry

Define elf "[%{u}t] %E: %M"

Define the CA specific stuff

# This defines the path of PKIServ or Customers as part of the URL

# This is used in a regular expression to map URLs to executables.

Define CA1 PKIServ|Customers

Define CA1PATH "_PKISERV_CONFIG_PATH_PKIServ /etc/pkiserv"

#Define the port for TLS

Define CA1Port 443

# specify the groups which can use the admin facility

Define CA1AdminAuth " Require saf-group SYS1 "

other stuff

LogLevel debug

ErrorLog "${PKILOG}/zzzz.log"

ErrorLogFormat "${elf}"

# uncomment these if you want the traces

#Define _PKISERV_CMP_TRACE 0xff

#Define _PKISERV_CMP_TRACE_FILE /tmp/pkicmp.%.trc

#Define _PKISERV_EST_TRACE 0xff

#Define _PKISERV_EST_TRACE_FILE /tmp/pkiest.%.trc

#Include the files

Include conf/80.conf

Include conf/1443.conf

Include conf/443.conf

The TLS configuration file

The file 443.conf has several parts. It uses the parametrised values above, for example ${pkidir} is replaced with /usr/lpp/pkiserv. When getting started you should not need to edit this file.

Listen ${CA1Port}

<VirtualHost *:${CA1Port}>

#define the log file for this port

ErrorLog "${PKILOG}/z${CA1Port}.log"

DocumentRoot "${pkidir}"

LogLevel Warn

ErrorLogFormat "${elf}"

Include conf/pkisetenv.conf

Include conf/pkissl.conf

KeyFile /saf ${PKIKeyRing}

SSLClientAuth Optional

#SSLClientAuth None

RewriteEngine On

# display a default page if there are problems

# I created it in ${PKIAppRoot}/PKIServ,

# (/usr/lpp/pkiserv/PKIServ/index.html)

FallbackResource "index.html"

The definitions for one CA are shown below. If you want a second CA, duplicate the definitions and change CA1 to CA2.

Notes on following section.

# Start of definitions for a CA

<IfDefine CA1>

SetEnv ${CA1PATH}

RewriteRule ^/(${CA1})/ssl-cgi/(.*) https://${sdn}/$1/ssl-cgi-bin/$2 [R,NE]

RewriteRule ^/(${CA1})/clientauth-cgi/(.*) https://${sdn}:1443/$1/clientauth-cgi-bin/$2 [R,NE,L]

ScriptAliasMatch ^/(${CA1})/adm(.*).rexx(.*) "${PKIAppRoot}/PKIServ/ssl-cgi-bin/auth/adm$2.rexx$3"

ScriptAliasMatch ^/(${CA1})/Admin "${PKIAppRoot}/PKIServ/ssl-cgi-bin/auth/admmain.rexx"

ScriptAliasMatch ^/(${CA1})/EU "${PKIAppRoot}/PKIServ/ssl-cgi-bin/camain.rexx"

ScriptAliasMatch ^/(${CA1})/(public-cgi|ssl-cgi-bin)/(.*) "${PKIAppRoot}/PKIServ/$2/$3"

<LocationMatch "^/(${CA1})/clientauth-cgi-bin/auth/pkicmp">

CharsetOptions NoTranslateRequestBodies

</LocationMatch>

<LocationMatch "^/(${CA1})/ssl-cgi-bin(/(auth|surrogateauth))?/cagetcert.rexx">

CharsetOptions TranslateAllMimeTypes

</LocationMatch>

</IfDefine>

#End of definitions for CA1

Grouping the statements for a CA in one place makes it very easy to support multiple CAs: just repeat the section between <IfDefine…> and </IfDefine> and change CA1 to CA2.
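To check which script a given URL path ends up at, it can help to replay the ScriptAliasMatch patterns outside HTTPD. The Python below is a minimal sketch of that, under some assumptions: the capture groups are taken to be (.*), the regex start anchor is written as ^ (some z/OS code pages display it as ¬), and the first matching rule wins, which is how mod_alias treats directives in the same context. The names RULES and map_uri are hypothetical.

```python
import re

CA1 = "PKIServ|Customers"       # value of "Define CA1" in pki.conf
APP_ROOT = "/usr/lpp/pkiserv"   # value of "Define PKIAppRoot" in pki.conf

# (pattern, target) pairs in the same order as the ScriptAliasMatch lines.
# \2 and \3 play the role of $2 and $3 in the HTTPD configuration.
RULES = [
    (rf"^/({CA1})/adm(.*).rexx(.*)", rf"{APP_ROOT}/PKIServ/ssl-cgi-bin/auth/adm\2.rexx\3"),
    (rf"^/({CA1})/Admin", rf"{APP_ROOT}/PKIServ/ssl-cgi-bin/auth/admmain.rexx"),
    (rf"^/({CA1})/EU", rf"{APP_ROOT}/PKIServ/ssl-cgi-bin/camain.rexx"),
    (rf"^/({CA1})/(public-cgi|ssl-cgi-bin)/(.*)", rf"{APP_ROOT}/PKIServ/\2/\3"),
]


def map_uri(uri):
    """Return the file the first matching rule maps the URI to, or None."""
    for pattern, target in RULES:
        match = re.search(pattern, uri)
        if match:
            return match.expand(target)
    return None


for uri in ("/Customers/EU", "/PKIServ/Admin", "/PKIServ/ssl-cgi-bin/camain.rexx"):
    print(uri, "->", map_uri(uri))
```

Note that both PKIServ and Customers map to files under the PKIServ directory, because the target path does not use the first capture group.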

The third part has definitions for controlling access to a directory. I added some more security information, and changed $$CLIENT$$ to %%CLIENT%%. This is a subset of the file, for illustration

# The User will be prompted to enter a RACF User ID

#and password and will use the same RACF User ID

# and password to access files in this directory

<Directory ${PKIAppRoot}/PKIServ/ssl-cgi-bin/auth>

AuthName AuthenticatedUser

AuthType Basic

AuthBasicProvider saf

Require valid-user

#Users must have access to the SAF APPLID to work

# ZZZ in my case

# it defaults to OMVSAPPL

<IfDefine PKISAFAPPL>

SAFAPPLID ${PKISAFAPPL}

</IfDefine>

# IBM Provided has $$CLIENT$$ where it should have %%CLIENT%%

# SAFRunAs $$CLIENT$$

# The following says use certificate if available else prompt for

# userid and password

SAFRunAs %%CERTIF%%

</Directory>…

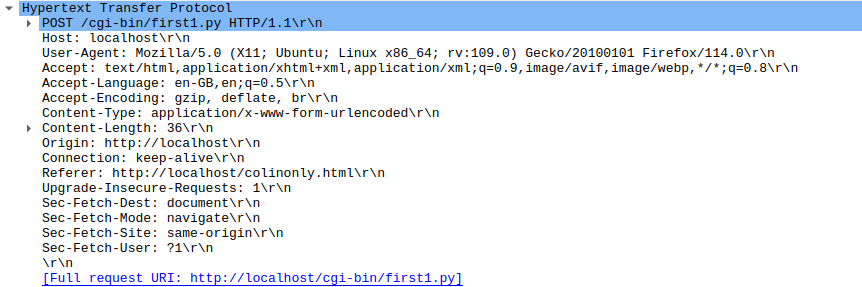

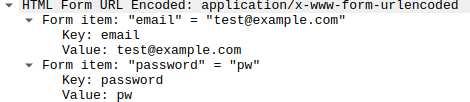

Debugging hints and tips

I spent a lot of time investigating problems, and getting the definitions right.

Whenever I made a change, I used

s COLWEB,action='restart'

to cause the running instance of HTTPD server to stop and restart. Any errors in the configuration are reported in the job which has the action='restart'. It is easy to overlook configuration problems, and then spend time wondering why your change has not been picked up.

I edited the envvars file, and added code to rename and delete logs. For example rm z443.log.save, and mv z443.log z443.log.save .

I found it useful to have

<VirtualHost *:443>

DocumentRoot "${pkidir}"

ErrorLog "${PKILOG}/z443.log"

ErrorLogFormat "${elf}"

LogLevel Warn

Where

- ErrorLog is where the logs for this virtual host (port 443) are stored. I like to have one per port.

- The format is defined by Define elf "[%{c}t] %E: %M" in the pki.conf file. The c gives compact time (2021-11-27 17:19:09). If you use %{cu}t you also get microseconds. I could not find a way to get just the time, with no date.

- LogLevel Warn. When trying to debug the RewriteRule and ScriptAlias I used LogLevel trace6. I also used LogLevel Debug authz_core_module:Trace6 which sets the default to Debug, but the authorization checking to Trace6.

With LogLevel Debug, I got a lot of good TLS diagnostics

Validating ciphers for server: S0W1, port: 443

No ciphers enabled for SSLV2

SSL0320I: Using SSLv3,TLSv1.0,TLSv1.1,TLSv1.2,TLSv1.3 Cipher: TLS_RSA_WITH_AES_128_GCM_SHA256(9C)

…

TLSv10 disabled, not setting ciphers

TLSv11 disabled, not setting ciphers

TLSv13 disabled, not setting ciphers

env_init entry (generation 2)

VirtualHost S0W1:443 is the default and only vhost

Then for each web session

Cert Body Len: 872

Serial Number: 02:63

Distinguished name CN=secp256r1,O=cpwebuser,C=GB

Country: GB

Organization: cpwebuser

Common Name: secp256r1

Issuer’s Distinguished Name: CN=SSCA256,OU=CA,O=SSS,C=GB

Issuer’s Country: GB

Issuer’s Organization: SSS

Issuer’s Organization Unit: CA

Issuer’s Common Name: SSCA256

[500865c0f0] SSL2002I: Session ID: A…AAE= (new)

[500865c0f0] [33620012] Peer certificate: DN [CN=secp256r1,O=cpwebuser,C=GB], SN [02:63], Issuer [CN=SSCA256,OU=CA,O=SSS,C=GB]
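When several people are testing at once, it can be handy to pull just the "Peer certificate" lines out of the log to see which certificates were presented. The following Python is a small sketch that assumes the line layout shown above; the names PEER_CERT and peer_certificates are invented for this example.

```python
import re

# Matches the "Peer certificate" line layout shown in the log extract above.
PEER_CERT = re.compile(
    r"Peer certificate: DN \[(?P<dn>[^\]]*)\], "
    r"SN \[(?P<serial>[^\]]*)\], "
    r"Issuer \[(?P<issuer>[^\]]*)\]"
)


def peer_certificates(lines):
    """Yield (dn, serial, issuer) for each 'Peer certificate' line found."""
    for line in lines:
        match = PEER_CERT.search(line)
        if match:
            yield match.group("dn"), match.group("serial"), match.group("issuer")


sample = [
    "[500865c0f0] SSL2002I: Session ID: A…AAE= (new)",
    "[500865c0f0] [33620012] Peer certificate: DN [CN=secp256r1,O=cpwebuser,C=GB], "
    "SN [02:63], Issuer [CN=SSCA256,OU=CA,O=SSS,C=GB]",
]
found = list(peer_certificates(sample))
print(found)
```

To run this against a real log, pass an open file instead of the sample list. The encoding of the log file on your system may need to be specified explicitly when opening it.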

With LogLevel Trace6 I got information about the RewriteRule, for example we can see /Customers/EU was mapped to /usr/lpp/pkiserv/PKIServ/ssl-cgi-bin/camain.rexx

applying pattern ‘¬/(PKIServ|Customers)/clientauth-cgi/(.*)’ to uri ‘/Customers/EU’

AH01626: authorization result of Require all granted: granted

AH01626: authorization result of : granted

should_translate_request: r->handler=cgi-script r->uri=/Customers/EU r->filename=/usr/lpp/pkiserv/PKIServ/ssl-cgi-bin/camain.rexx dcpath=/

uri: /Customers/EU file: /usr/lpp/pkiserv/PKIServ/ssl-cgi-bin/camain.rexx method: 0 imt: (unknown) flags: 00 IBM-1047->ISO8859-1

# and the output

Headers from script ‘camain.rexx’:

Status: 200 OK

Status line from script ‘camain.rexx’: 200 OK

Content-Type: text/html

X-Frame-Options: SAMEORIGIN

Cache-Control: no-store, no-cache