On my little z/OS system, one address space was using a lot of CPU – but doing nothing. What was going on? The address space was a z/OSMF based on the Liberty Web server.

The blog post tells you how to take a dump, and use IPCS to display useful information from the system trace. The system trace contains deep down information like

- Task A was dispatched on this processor at this time.

- It issued a request MVS to get a block of storage, and time.

- The request completed, with this return code, at this time.

- Task A was interupted at this time

- Task B was dispatched

There is a lot of detailed information, and it is overwhelming when you first look at it. This blog post shows how you can get summary information from the trace – while ignoring all of the detailed, scary stuff. It does not require any prior knowledge of IPCS or dumps.

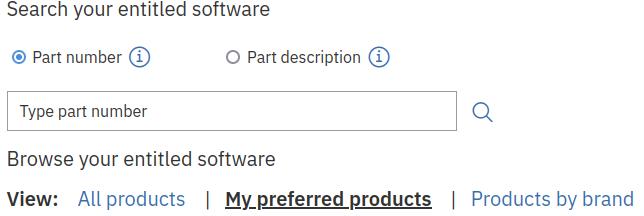

- Take your dump

- Go into IPCS

- Systrace output

- Summary of the dump

- Summary of CPU usage by engine

- Summary of the CPU over all engines

- CPU breakdown by jobname

- Breakdown by jobname and system threads(SRB)

- Breakdown by jobname and application thread(TCB)

- Lock usage

- Local lock (address space lock)

- Timer interrupts

- I/O Activity device and response time

- Advanced topics

Take your dump

DUMP COMM=(COLINS DUMP)

R xx,jobname=IZUSVR1

This gives output like

IEE600I REPLY TO 01 IS;JOBNAME=IZUSVR1

IEA045I AN SVC DUMP HAS STARTED AT TIME=16.24.56 DATE=06/21/2021 044

FOR ASID (0044) QUIESCE = YES

…

IEA611I COMPLETE DUMP ON SYS1.S0W1.Z24A.DMP00004

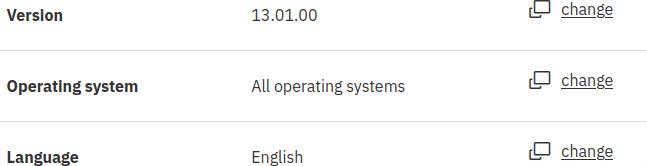

Go into IPCS

I find it easier to use a wide(132) screen for IPCS.

This may be in your ISPF panels, or you might need to issue a command before starting ISPF. You might need to talk to your system programmer.

You get the primary menu

------------------- z/OS 02.04.00 IPCS PRIMARY OPTION MENU

OPTION ===>

0 DEFAULTS - Specify default dump and options

1 BROWSE - Browse dump data set

2 ANALYSIS - Analyze dump contents

3 UTILITY - Perform utility functions

4 INVENTORY - Inventory of problem data

5 SUBMIT - Submit problem analysis job to batch

6 COMMAND - Enter subcommand, CLIST or REXX exec

T TUTORIAL - Learn how to use the IPCS dialog

X EXIT - Terminate using log and list defaults

Select option 0

------------------------- IPCS Default Values ---------------------------------

Command ===>

You may change any of the defaults listed below. The defaults shown before

any changes are LOCAL. Change scope to GLOBAL to display global defaults.

Scope ==> LOCAL (LOCAL, GLOBAL, or BOTH)

If you change the Source default, IPCS will display the current default

Address Space for the new source and will ignore any data entered in

the Address Space field.

Source ==> DSNAME('SYS1.CTRACE1')

Address Space ==> RBA

Message Routing ==> NOPRINT TERMINAL NOPDS

Message Control ==> CONFIRM VERIFY FLAG(WARNING)

Display Content ==> NOMACHINE REMARK REQUEST NOSTORAGE SYMBOL ALIGN

Press ENTER to update defaults.

Use the END command to exit without an update.

Replace the source with DSN(‘your dumpname’).

Change Scope from LOCAL to BOTH

Press enter to update. Use =6 on the command line to get to the IPCS command window.

Enter a free-form IPCS subcommand or a CLIST or REXX exec invocation below:

===>

----------------------- IPCS Subcommands and Abbreviations -----------------

ADDDUMP | DROPDUMP, DROPD | LISTDUMP, LDMP | RENUM, REN

ANALYZE | DROPMAP, DROPM | LISTMAP, LMAP | RUNCHAIN, RUNC

ARCHECK | DROPSYM, DROPS | LISTSYM, LSYM | SCAN

ASCBEXIT, ASCBX | EPTRACE | LISTUCB, LISTU | SELECT

ASMCHECK, ASMK | EQUATE, EQU, EQ | LITERAL | SETDEF, SETD

CBFORMAT, CBF | FIND, F | LPAMAP | STACK

CBSTAT | FINDMOD, FMOD | MERGE | STATUS, ST

CLOSE | FINDUCB, FINDU | NAME | SUMMARY, SUMM

COPYDDIR | GTFTRACE, GTF | NAMETOKN | SYSTRACE

COPYDUMP | INTEGER | NOTE, N | TCBEXIT, TCBX

COPYTRC | IPCS HELP, H | OPEN | VERBEXIT, VERBX

CTRACE | LIST, L | PROFILE, PROF | WHERE, W

If you use the command “systrace” you will see the scary internal trace. PF3 out of it.

Use the command

systrace jobname(IZUSVR1) PERFDATA(DOWHERE) time(LOCAL)

Go to the bottom of the report ( type m and press PF8) and type

REPORT VIEW

This gives you the report in an editor session, so you can exclude, delete, sort count etc.

This gives a lot of data. It is in sections, the sections are…FLocal

Summary of the dump

Analysis from 06/21/2021 16:24:46.391102 to 16:24:56.042146 9.651044 seconds

This gives the time of day, and the interval of the trace is 9.65 seconds.

Summary of CPU usage by engine

CPU Type Pol Park SRB Time TCB Time Idle Time

---- ---- ---- ---- --------------------- --------------------- ---------------------

0000 CP High No 0.190562 1.974% 0.828988 8.589% 8.603271 89.143%

0001 CP High No 0.098836 1.024% 0.393259 4.074% 9.143735 94.743%

0002 CP High No 0.086573 0.897% 0.415063 4.300% 9.136385 94.667%

0003 zIIP High No 0.015463 0.160% 2.227832 23.083% 7.398707 76.662%

0004 zIIP High No 0.000000 0.000% 1.094373 11.339% 8.551280 88.604%

---- ---- ---- ---- --------------------- --------------------- ---------------------

0.391434 4.959518 42.833380

This shows

- Most of the time was spent in TCB “application thread” mode (4.959 seconds of CPU) rather than SRB “system thread” mode (0.391 seconds of CPU).

- One ZIIP was busy 23 % of the time, the other ZIIP was busy 11 % of the time.

Summary of CPU overall over 5 engines

SRB time : 0.391434

TCB time : 4.959518

Idle time : 42.833380

CPU Overhead : 0.070886

------------

Total : 48.255220

This summarises the data

- 4.95 seconds of CPU in TCB mode in 9.65 seconds of trace

- 42 seconds idle

- 5 engines * 9.65 seconds duration = 48.25

CPU break down by ASID/Jobname

CPU breakdown by ASID:

ASID Jobname SRB Time TCB Time Total Time

---- -------- ------------ ------------ ------------

0001 *MASTER* 0.011086 0.017940 0.029027

0003 RASP 0.000186 0.000000 0.000186

0005 DUMPSRV 0.035545 0.008959 0.044504

0006 XCFAS 0.021590 0.074411 0.096001

...

0044 IZUSVR1 0.021217 3.638295 3.659513

0045 COLIN 0.000000 0.000000 0.000000

0046 RMF 0.010238 0.020204 0.030442

0047 RMFGAT 0.019961 0.160512 0.180474

------------ ------------ ------------

0.391434 4.959518 5.350953

Most of the CPU was in ASID 44 for job IZUSVR1.

Breakdown by system thread (SRB) by address space/jobname

SRB breakdown by ASID: (WHERE command bypassed for CPU usage less than 0.100000):

ASID: 0001 Jobname: *MASTER*

IASN SRB PSW # of SRBs Time

---- ----------------- --------- ------------

0001 070C0000 83D0E8A0 2 0.000314

...

ASID: 0003 Jobname: RASP

IASN SRB PSW # of SRBs Time

Ignore this unless the SRB usage was high.

Breakdown of CPU by used thread by address space/jobname

TCB breakdown by ASID:

ASID Jobname TCB Adr # of DSPs Time

---- -------- -------- --------- ------------

0001 *MASTER* 008EDE88 1 0.000535

...

ASID Jobname TCB Adr # of DSPs Time

---- -------- -------- --------- ------------

0044 IZUSVR1 008C8E88 22 0.013143

0044 IZUSVR1 008AD7A0 30 0.006694

0044 IZUSVR1 008B97B8 37 0.015926

0044 IZUSVR1 008BA3E8 50 0.017547

0044 IZUSVR1 008B2628 15 0.007748

0044 IZUSVR1 008C4840 19 0.008433

0044 IZUSVR1 008BD2D8 20 0.008551

0044 IZUSVR1 008CDC68 14 0.008107

0044 IZUSVR1 008C8328 15 0.006540

0044 IZUSVR1 008CAC68 16 0.006612

0044 IZUSVR1 008C9E88 14 0.006634

0044 IZUSVR1 008B5C68 14 0.005668

0044 IZUSVR1 008CBBE0 28 0.015650

0044 IZUSVR1 008ADE00 17 0.005861

0044 IZUSVR1 008B9470 15 0.006014

0044 IZUSVR1 008BEA48 17 0.017092

0044 IZUSVR1 008C6CF0 20 0.010093

...

0044 IZUSVR1 008CC2D8 548 0.827491

0044 IZUSVR1 008D2E88 25 0.445230

0044 IZUSVR1 008D2510 819 0.412526

0044 IZUSVR1 008CEE88 14 0.158703

0044 IZUSVR1 008D3E88 8 0.003189

0044 IZUSVR1 008C4CF0 18 0.013237

------------

3.638295

- There were 166 TCB’s which did something in the time period.

- TCB with address 008D2510 was dispatched 819 times times in 9 seconds – using 0.4 seconds of CPU! This was being dispatched 100 times a second, and used 5 milliseconds of CPU on average per dispatch. This looks high considering the system was not doing any work.

- TCB with address 008d2E88 was dispatched 25 times in 9 seconds, and used 0.44 seconds of CPU or 17 ms of CPU per dispatch. This is doing more work per dispatch than the previous TCB.

Display lock usage

Lock events for CEDQ None found Lock events for CSMF None found Lock events for CLAT None found Lock events for CMS None found Lock events for OTHR None found

Nothing of interest here.

Display local lock usage – locking the job

Lock events for LOCL of ASID 0010 OMVS

Lock ASID Jobname TCB/WEB Type PSW Address IASN Suspended at Resumed at Suspend Time

---- ---- -------- -------- ---- ----------------- ---- --------------- --------------- ------------

CML 0044 IZUSVR1 008C33E8 TCB 00000000_04868084 0010 16:24:49.612051 16:24:49.612076 0.000025

CML 0044 IZUSVR1 008B4938 TCB 00000000_048687E4 0010 16:24:49.612090 16:24:49.612570 0.000480

...

---- ---- -------- -------- ---- ----------------- ---- --------------- --------------- ------------

Suspends: 6 Contention Time: 0.000821 0.008% WU Suspend Time: 0.000823

Lock events for LOCL of ASID 0044 IZUSVR1

Lock ASID Jobname TCB/WEB Type PSW Address IASN Suspended at Resumed at Suspend Time

---- ---- -------- -------- ---- ----------------- ---- --------------- --------------- ------------

LOCL 0044 IZUSVR1 008D3E88 TCB 00000000_010CCD62 0044 16:24:46.404417 16:24:46.404561 0.000144

LOCL 0044 IZUSVR1 008D3E88 TCB 00000000_010ADA78 0044 16:24:46.410188 16:24:46.412182 0.001993

Suspends: 83 Contention Time: 0.042103 0.436% WU Suspend Time: 0.079177

The LOCal Lock (LOCL) is the MVS lock used to serialise on the address space, for example updating some MVS control blocks. For example if MVS wants to cancel an address space, it has to get the Local lock, to make sure that critical work completes.

- For the OMVS addess space, address space IZUZVSR1 got the lock 6 times, and was delayed for 0.823 milliseconds waiting for the local lock.

- For the IZUSVR1 address space, 83 TCBs got the local lock, and were suspended for a total of 79 milliseconds.

Display timer events (CPU Clock comparator CLKC and timer TIMR)

ASID: 0044 Jobname: IZUSVR1 SRB/TCB IASN Interrupt PSW Count Where processing -------- ---- -------- -------- -------- ---------------------------------------- 00000000 0044 070C0000 81D83CB8 2 IEANUC01.ASAXMPSR+00 READ/WRITE NUCLEUS ... 008CEE88 0044 078D0401 945F2B28 11 AREA(Subpool252Key00)+CB28 EXTENDED PRIVATE 008CEE88 0044 078D2401 945F99BE 1 AREA(Subpool252Key00)+0139BE EXTENDED PRIVATE 008CEE88 0044 078D2401 FA63F71E 1 SPECIALNAME+03F71E EXTENDED PRIVATE 008CEE88 0044 078D0401 FAB00178 1 SPECIALNAME+0178 EXTENDED PRIVATE 008CEE88 0044 078D2401 FAB447BE 1 SPECIALNAME+0447BE EXTENDED PRIVATE 008CEE88 0044 078D0401 FAD9E660 1 SPECIALNAME+29E660 EXTENDED PRIVATE 008B17B8 0044 070C0000 81C92030 1 IEANUC01.IAXVP+4048 READ ONLY NUCLEUS 008B27C0 0044 072C1001 91AF2460 1 BBGZAFSM+7520 EXTENDED CSA ... 008D2E88 0044 078D0401 945F2B28 22 AREA(Subpool252Key00)+CB28 EXTENDED PRIVATE 008D2E88 0044 078D0401 FB036F08 1 SPECIALNAME+036F08 EXTENDED PRIVATE ... 008D2E88 0044 078D0401 FC145732 1 AREA(Subpool229Key00)+A732 EXTENDED PRIVATE

This displays

- The TCB

- The virtual adress where the interrupt occurred

- These entries are in time sequence, and so we can see the second entry had 11 interupts in quick succession (count is 11).

- The Where processing, is a guess at converting the address into a module name. Sometimes it works, for example Module IEANUC01, csect ASAXMPSR, offset 0. Sometimes it cannot tell, for example from Java code.

This shows 2 things

- The application said wake me up in a certain time period

- The TCB was executing and z/OS interrupted it because it needed to go and dispatch some other work. This gives a clue as to hot spots in the code. If the same address occurs many times – the code was executing here many times. I look in the raw systrace to see if this is a TIMer (boring) or a CLKC interesting. Interesting gives you a profile of what code is CPU intensive.

- You can delete all the records outside of this block, then sort 15 32 to sort on PSW address. For my IPCS dump the address 078D0401 945F2B28 occurred 35 times.

I/O activity

Device SSCH Issued I/O Occurred Duration

------ --------------- --------------- ------------

0A99 16:24:48.009819 16:24:48.010064 0.000244

0A99 16:24:48.033619 16:24:48.033856 0.000236

0A99 16:24:48.051014 16:24:48.051081 0.000066

0A99 16:24:48.057377 16:24:48.057434 0.000056

0A99 16:24:48.080331 16:24:48.080430 0.000098

------------

0.000702

Events for 0A99 : 5

Quickest I/O : 0.000056

Slowest I/O : 0.000244

Total : 0.000702

Average : 0.000140

This says for the device 0A99 there were 5 I/O requests, total time 0.7 milliseconds

- I used the REPORT VIEW to get the data into ISPF edit,

- deleted all the records above the I/O section

- Used X ALL

- F TOTAL ALL

- This shows the totals for all I/Os. Most totals were under 1 ms. One I/O was over 5 seconds.

- Displaying the detailed records above this TOTAL record showed one I/O took over 5 seconds!

End of report

End of PERFDATA analysis.

Advanced topic: Look at hot spots

I had seen that PSW 078D0401 945F2B28 was hot. If you go back to the IPCS command panel, you may be able to use the command

L 945F2B28

To display the storage. This will not work. You have to remove the top bit (80), so

L 145F2B28

may work.

If the first character is a letter (A-F) then you need to put a 0 on the front for example

L 0D2345678

You might need to put the address space in as well for example

L 145F2B28 ASID ID(x’44’)

You can say

L 145F2B28 ASID ID(x’44’) LENGTH(4096)

To display large sections of storage

Dig into the trace

You can use

systrace jobname(izusvr1)tcb(x’008CC2D8′)

to display all entries for the specified TCB and jobname.

- Go to the bottom ( type Max press PF8)

- use the report view command to get into and edit session

- Columns 79 to 88 contain a description of some of the system calls

- use X ALL;f ‘ ‘ 79 84 all;del all nx to delete all lines without a description

This gives you a picture of the MVS services being used.